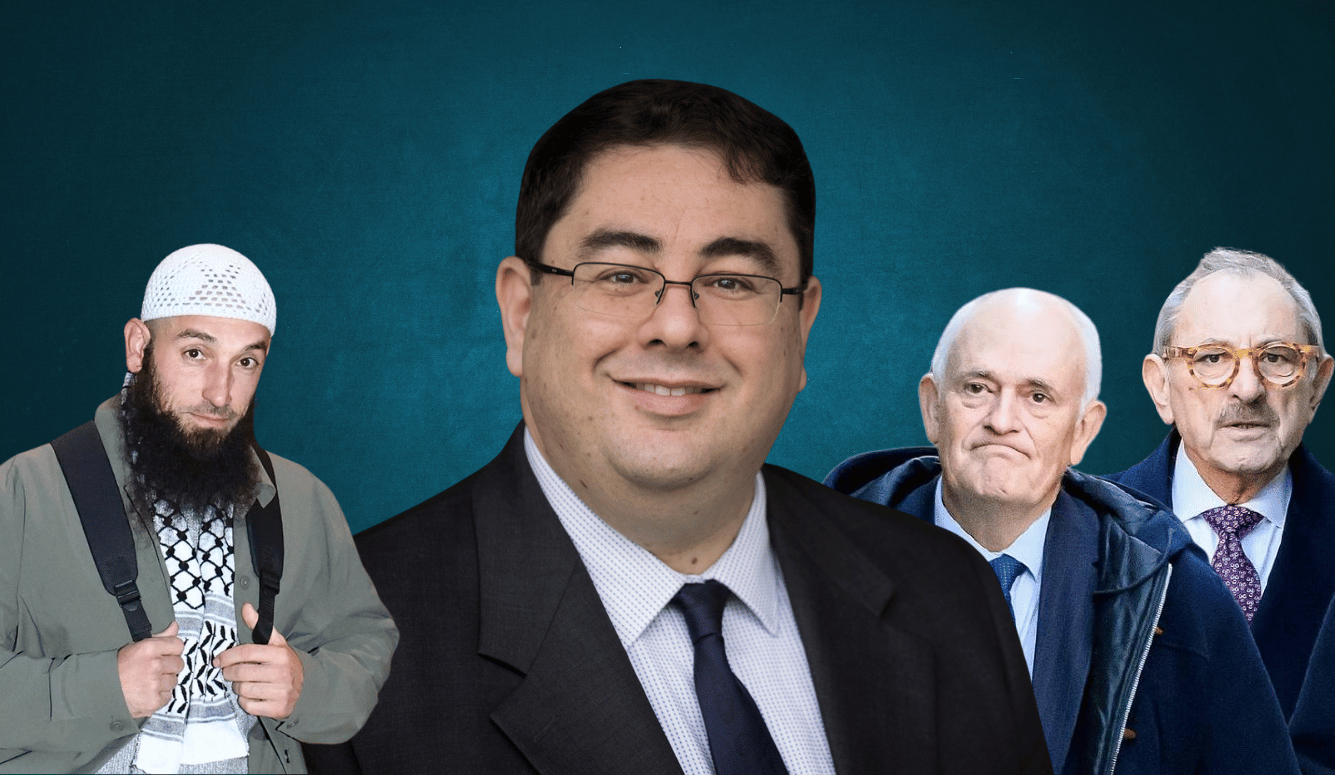

Can We Regulate Online Hate Without Killing Free Speech? With Dr Andre Oboler | Quillette Cetera Ep. 50

As antisemitism surges online and in the streets, Dr Andre Oboler joins Zoe to explore what’s fuelling the hate, from conspiracy thinking to political ideology, and how to stop it.

By Zoe Booth

In this episode, Dr Andre Oboler—CEO of the Online Hate Prevention Institute and a global expert on antisemitism, hate speech, and online extremism—joins Zoe Booth to unpack the surge in antisemitic hate speech since 7 October 2023.

Dr Oboler discusses recent antisemitic attacks on Australian synagogues, and his role as an expert witness in the landmark Wissam Haddad hate speech case, which tested the limits of Australia’s racial vilification laws. He critiques the failure of major social media platforms to moderate digital hate, and outlines the legal, educational, and community responses needed to address the rise in online antisemitism.

The conversation also tackles a difficult but essential question: How do we protect free speech while holding people accountable for inciting hatred and violence online?

Transcript

ZB: Could you tell our audience a little bit about what it is that the Online Hate Prevention Institute does?

AO: Okay. We were set up in January 2012, so we’ve been around for quite a while now. Our mission is to prevent harm to people as a result of online hate, and that extends from hate speech all the way through to extremism. At the really pointy end, we’ve done things like taking down manifestos and live-streamed videos from actual terrorist attacks within hours of them occurring.

ZB: So when you say harm, you mean physical harm?

AO: It can be physical, it can be mental, emotional, et cetera. It’s the full gamut of psychological harms as well. We were set up as a harm prevention charity, which is a very particular type of charity under Australian law. The legislation basically defines the types of harm that harm prevention charities are meant to address. That ranges from suicide prevention, self-harm, and problem gambling—though that’s not usually relevant to us—through to bullying, harassment, physical assault. All of that can, in our case, be triggered by online content.

ZB: What are the main types of harm that you see?

AO: We deal with a lot of different types. When we first started, one of our early projects in 2012 related to racism against Indigenous Australians. There was a huge number of Facebook pages—driven by a small group of people—filled with “Aboriginal memes” that were deeply racist. They referenced things like people in remote areas sniffing petrol fumes—mocking a real health crisis—and included welfare-related slurs and commentary. The Human Rights Commission was responding, but we did the legwork, documenting the pages. Some of them were taken down, but others kept being re-uploaded—often in ways that weren’t immediately obvious as new pages. So it looked like nothing was being done. We produced a report.
